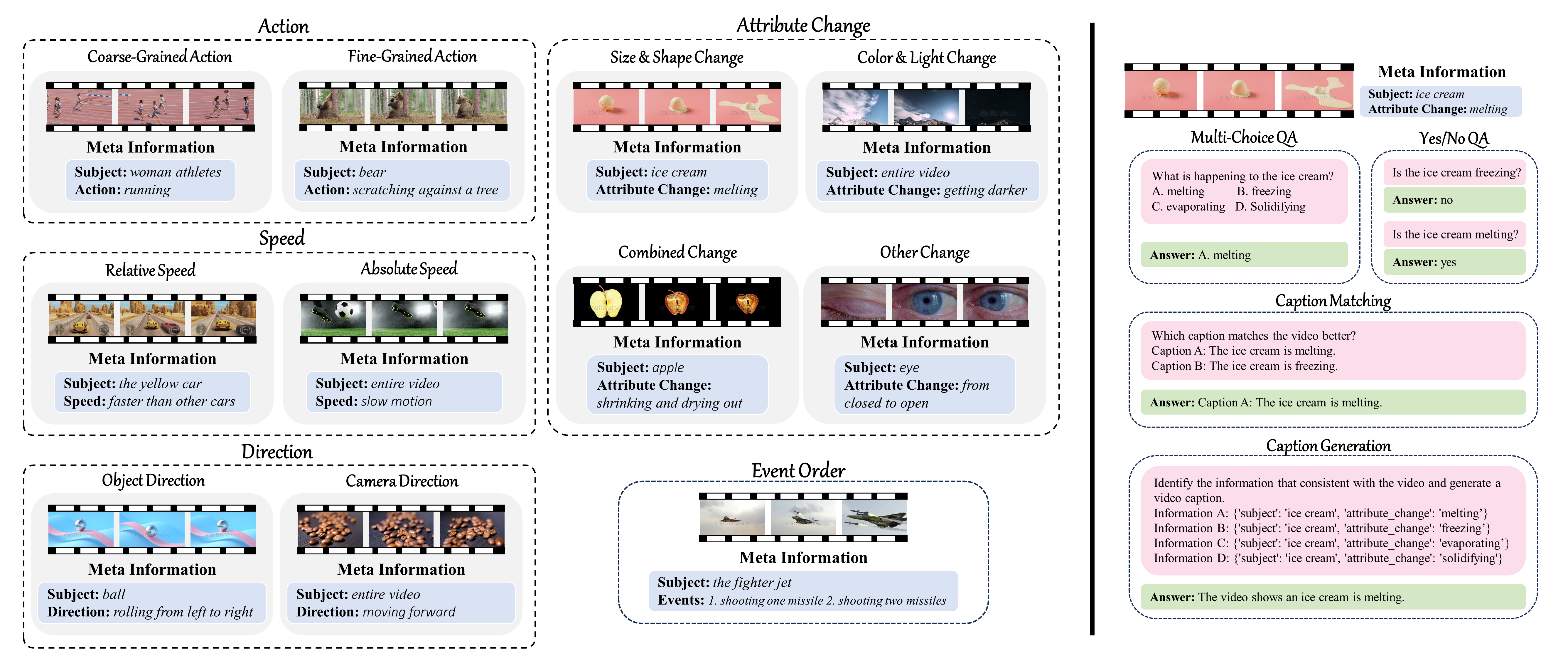

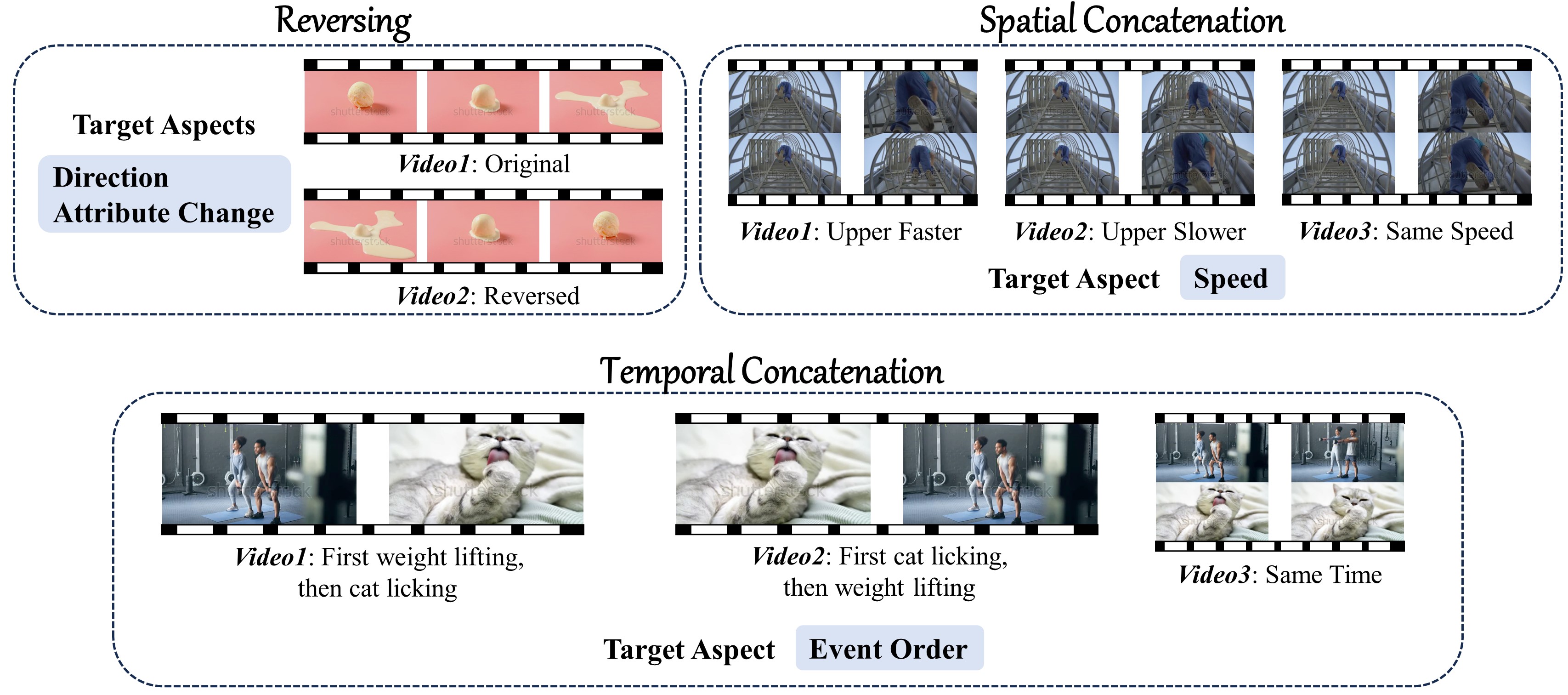

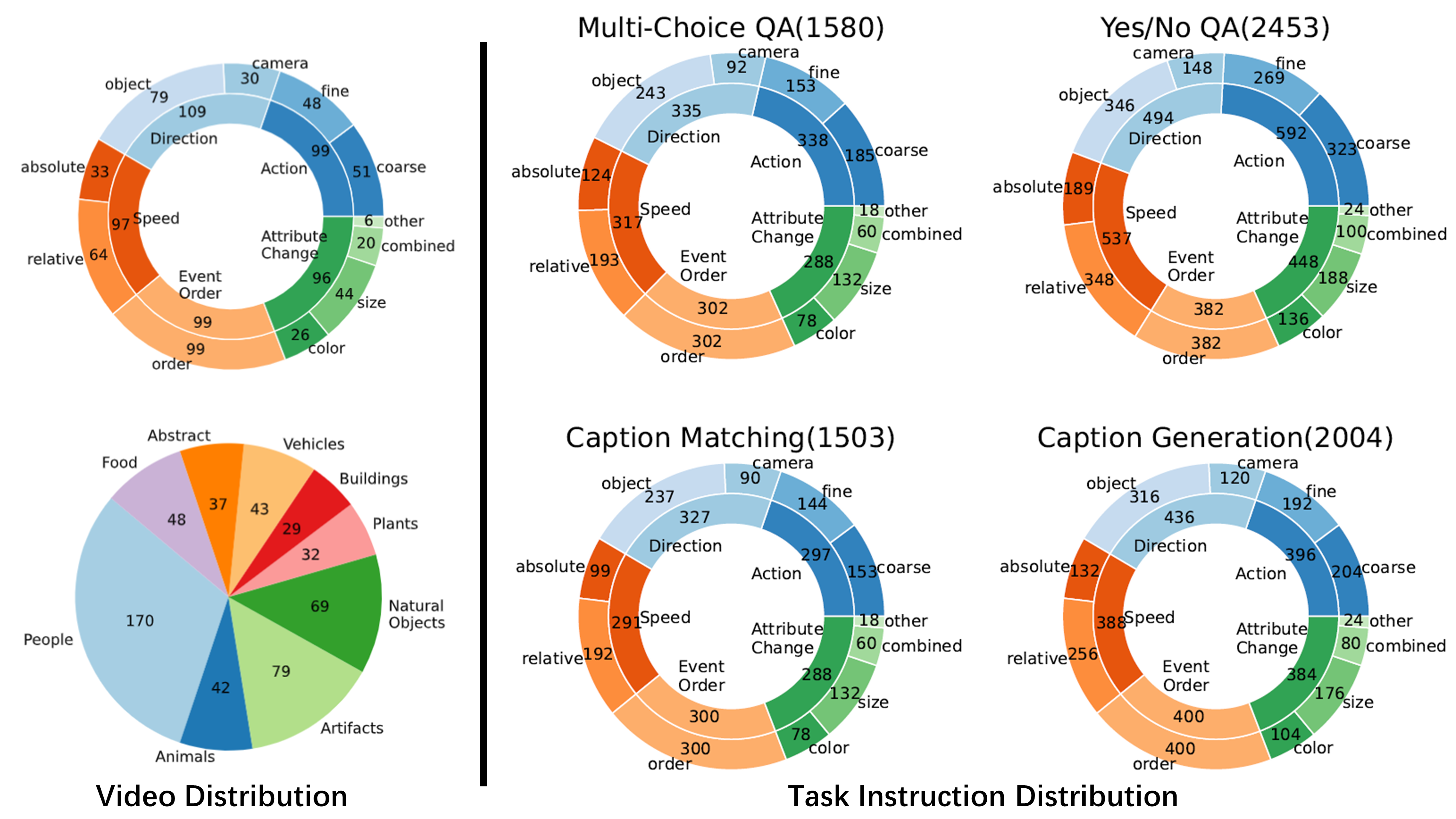

Recently, there has been a surge of interest in video large language models (Video LLMs). However, existing benchmarks fail to provide comprehensive feedback on the temporal perception ability of Video LLMs. On the one hand, most of them cannot distinguish between different temporal aspects (e.g., speed, direction) and thus cannot reflect nuanced performance on these specific aspects. On the other hand, they are limited in the diversity of task formats (e.g., only multi-choice QA), which hinders understanding of how temporal perception performance may vary across different types of tasks. Motivated by these two problems, we propose the TempCompass benchmark, which introduces a diversity of temporal aspects and task formats. To collect high-quality test data, we devise two novel strategies: (1) In video collection, we construct conflicting videos that share the same static content but differ in a specific temporal aspect, which prevents Video LLMs from leveraging single-frame bias or language priors. (2) To collect the task instructions, we propose a paradigm where humans first annotate meta-information for a video and then an LLM generates the instruction. We also design an LLM-based approach to automatically and accurately evaluate the responses from Video LLMs. Based on TempCompass, we comprehensively evaluate 8 state-of-the-art (SOTA) Video LLMs and 3 Image LLMs, and reveal the striking fact that these models exhibit notably poor temporal perception ability.
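The abstract mentions an LLM-based approach for automatically evaluating Video LLM responses. As a rough illustration for multi-choice questions only, the sketch below assumes a hybrid pattern: a cheap rule-based matcher is tried first, and an LLM judge is consulted only when no option can be matched. This is our assumption, not necessarily the exact TempCompass pipeline; `match_choice`, `evaluate`, and the `llm_judge` callable are hypothetical names, and in practice `llm_judge` would wrap a call to some LLM API.

```python
import re
from typing import Callable, Optional

# Hypothetical judge signature: (question, options, response) -> option letter or None.
LLMJudge = Callable[[str, dict[str, str], str], Optional[str]]


def match_choice(response: str, options: dict[str, str]) -> Optional[str]:
    """Rule-based matching: return an option letter if the response clearly picks one."""
    text = response.strip()
    # Case 1: a leading letter such as "C", "C.", "C:", or "(C)".
    # A following space is deliberately NOT accepted, so "A person ..." is not read as option A.
    m = re.match(r"^\(?([A-Z])(?:[.:)]|$)", text)
    if m and m.group(1) in options:
        return m.group(1)
    # Case 2: the response contains exactly one option's full text.
    hits = [key for key, value in options.items() if value.lower() in text.lower()]
    if len(hits) == 1:
        return hits[0]
    return None  # ambiguous or unmatched


def evaluate(question: str, options: dict[str, str], response: str, answer: str,
             llm_judge: Optional[LLMJudge] = None) -> bool:
    """Score one response; fall back to the (hypothetical) LLM judge only when rules cannot decide."""
    choice = match_choice(response, options)
    if choice is None and llm_judge is not None:
        choice = llm_judge(question, options, response)
    return choice == answer
```

The design choice here is cost: most well-formed answers are resolved by the regular expression and substring check, so the LLM is only needed for free-form or ambiguous replies.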

Highlights

Can your Video LLM correctly answer the following question for both videos?

What is happening in the video?

A. A person drops down the pineapple

B. A person pushes forward the pineapple

C. A person rotates the pineapple

D. A person picks up the pineapple
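The question above is posed for two conflicting videos that share static content but differ in one temporal aspect, so a model relying on a single frame or on language priors would tend to give the same answer for both. A hypothetical way to expose this is to credit a pair only when both videos are answered correctly. The sketch below contrasts that strict pair score with plain per-video accuracy; the `PairResult` structure and both metric names are our illustration, not the benchmark's official scoring, and any gold letters used with it are made up.

```python
from dataclasses import dataclass


@dataclass
class PairResult:
    """Predictions and gold answers for one question asked on a pair of conflicting videos."""
    pred_a: str
    gold_a: str
    pred_b: str
    gold_b: str


def per_video_accuracy(pairs: list[PairResult]) -> float:
    """Fraction of individual videos answered correctly."""
    if not pairs:
        return 0.0
    correct = sum((p.pred_a == p.gold_a) + (p.pred_b == p.gold_b) for p in pairs)
    return correct / (2 * len(pairs))


def pair_accuracy(pairs: list[PairResult]) -> float:
    """Fraction of pairs where BOTH conflicting videos are answered correctly."""
    if not pairs:
        return 0.0
    both = sum(p.pred_a == p.gold_a and p.pred_b == p.gold_b for p in pairs)
    return both / len(pairs)
```

A model that ignores temporal order and gives the same letter for both videos of a pair can still earn half credit per video, but it earns nothing under the pair score.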

Data

Evaluation

@article{liu2024tempcompass,
  title = {TempCompass: Do Video LLMs Really Understand Videos?},
  author = {Yuanxin Liu and Shicheng Li and Yi Liu and Yuxiang Wang and Shuhuai Ren and Lei Li and Sishuo Chen and Xu Sun and Lu Hou},
  year = {2024},
  journal = {arXiv preprint arXiv:2403.00476}
}

This website is adapted from Nerfies, LLaVA-RLHF and Silkie, licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.

Usage and License Notices: The data and code are intended and licensed for research use only. The dataset is licensed under CC BY-NC 4.0 (allowing only non-commercial use).